Tuesday, November 14th

Tuesday was the first full day of SC23, and Groqsters were busy hosting client meetings in the Groq VIP lounge and giving three live presentations in our booth.

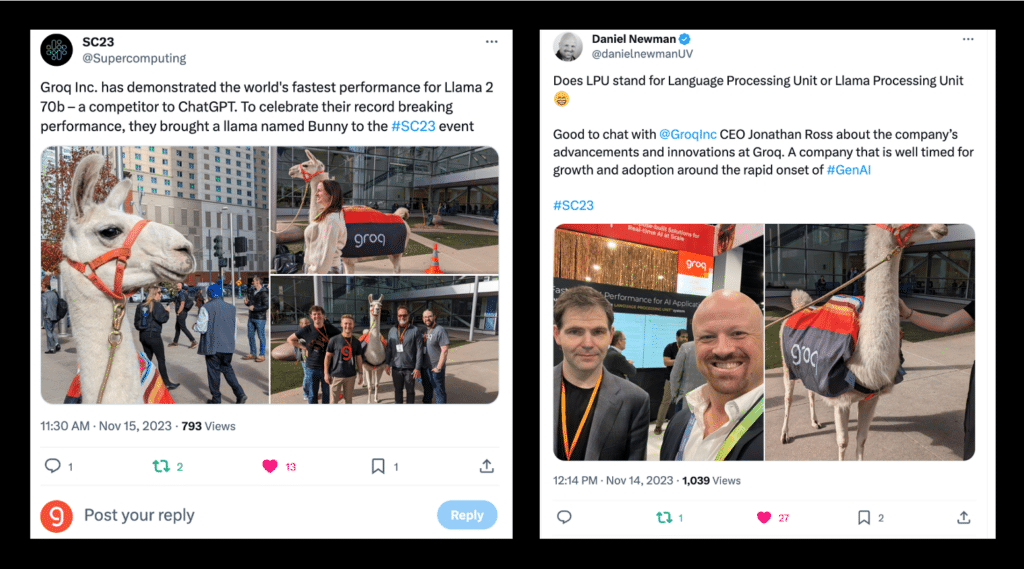

Our CEO and Founder, Jonathan Ross, talked with industry analysts including Daniel Newman and Patrick Moorhead. We interviewed Argonne Principal Computer Scientist Nicholas Schwarz and hosted Murali Emani, a Computer Scientist who covered the Argonne Leadership Computing Facility (ALCF) AI Testbed program.

Oh, and yes, we absolutely showcased a real live Groq llama outside the convention center, celebrating all our success running Llama-2 70B on our LPU™ Inference Engine at record-breaking speeds! "Bunny" from Front Range Llamas was the star of the show and appeared as the opener in HPCwire's Daily Recap video. Now, let's get into some insights…

Customers

After a full day of customer and partner meetings, a clear trend emerged: industry leaders know they need to diversify their AI inference solution portfolio. Yes, most of them are stocked with the best CPUs and graphics processors the market can offer for training. But those legacy solutions come with supply, power, and latency concerns that will not serve the explosion of AI inference applications at enterprise scale.

Groq is showing AI leaders what they need to unlock the full potential of enterprise-scale inference. Our LPU™ Inference Engine is the missing piece in their solution portfolio, offering 10x lower latency and 10x better power efficiency at 10x lower cost. They understand that what got us here won't take us forward.

Michelle Donnelly, Chief Revenue Officer, Groq

Science & Research

After speaking with various scientists from our customer Argonne National Laboratory, we feel that the future of AI-powered science and research using the LPU Inference Engine really is limitless. You can read Argonne's latest article on how Groq will help accelerate data-intensive experimental research as part of the ALCF AI Testbed.

The massive amounts of data produced by scientific instruments such as light sources, telescopes, detectors, particle accelerators, and sensors create a natural environment for AI technologies to accelerate time to insight. The addition of Groq systems means faster AI inference for science, which in turn means more tightly integrated scientific resources.

Venkatram Vishwanath, ALCF Data Science Team Lead, Argonne National Lab

If you are interested in leveraging Groq for your science and research, you can register to attend the virtual Groq AI Workshop, hosted by the ALCF on December 6th and 7th.

SC23 Continues

The fun isn't over yet! Groq continues to show live demos of Llama-2 70B running on our LPU Inference Engine, and we're hosting more sessions in booth #1681 on Wednesday, November 15th. Add the following sessions to your calendar:

- 10-10:30am | The Future of Silicon Design with AI: Design Space Exploration

- 1-1:30pm | Converged Compute: AI-accelerated Seismic & CFD

- 3-3:45pm | Record-setting LLM Performance: 300+ Tokens/s per User on an LPU™ Inference Engine