Llama 3 Now Available to Developers via GroqChat and GroqCloud™

Here’s what’s happened in the last 36 hours:

- April 18th, Noon: Meta releases versions of its latest Large Language Model (LLM), Llama 3.

- April 19th, Midnight: Groq releases Llama 3 8B (8k) and 70B (4k, 8k) running on its LPU™ Inference Engine, available to the developer community via groq.com and the GroqCloud™ Console.

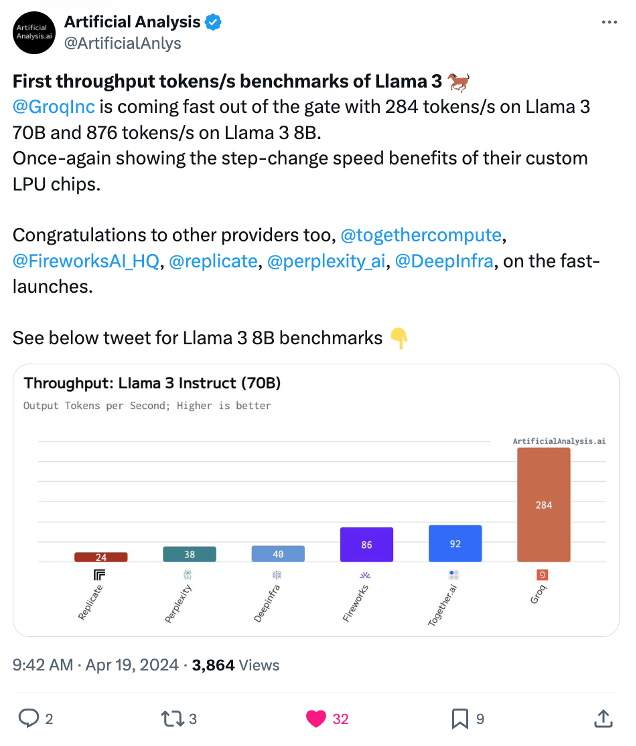

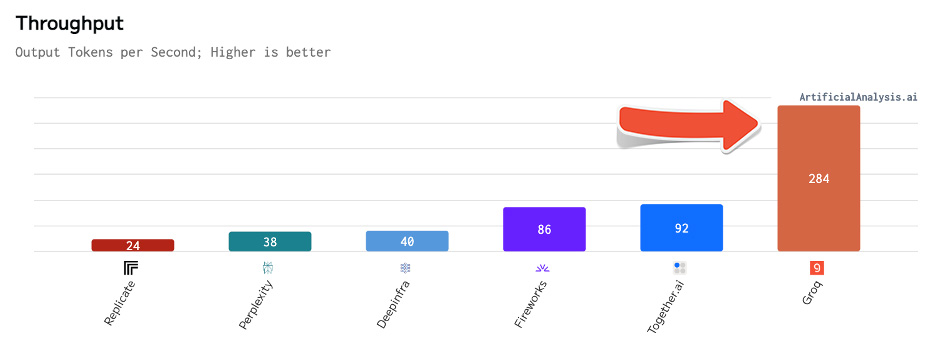

- April 19th, 10am: ArtificialAnalysis.ai releases its first set of Llama 3 benchmarks.

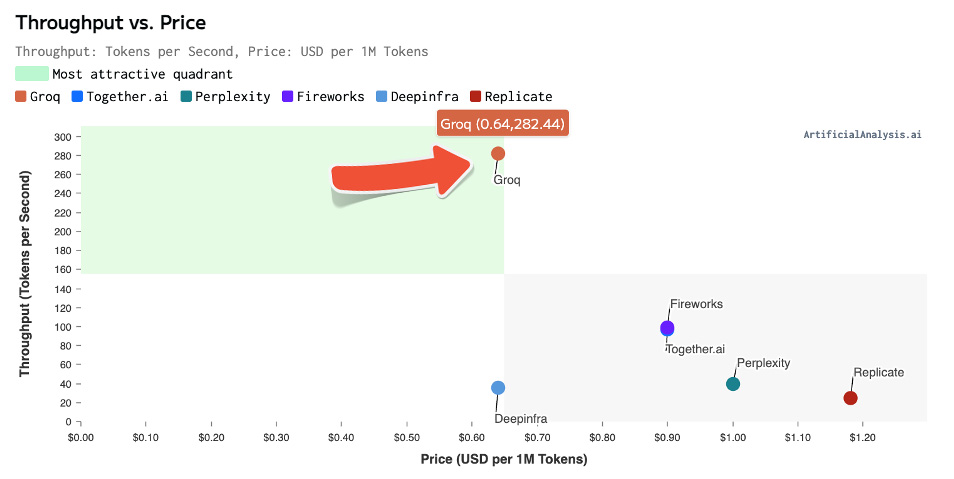

Artificial Analysis has independently benchmarked Groq as achieving a throughput of 877 tokens/s on Llama 3 8B and 284 tokens/s on Llama 3 70B, the highest of any provider by over 2X. Groq's offer is also cost competitive with both models priced at or below other providers. Combined with Llama 3's impressive quality, Groq's offer is compelling for a broad range of use-cases including emerging use-cases which demand a high number of interactions with LLMs such as AI agents.

George Cameron, Co-Founder, ArtificialAnalysis.ai

Throughput

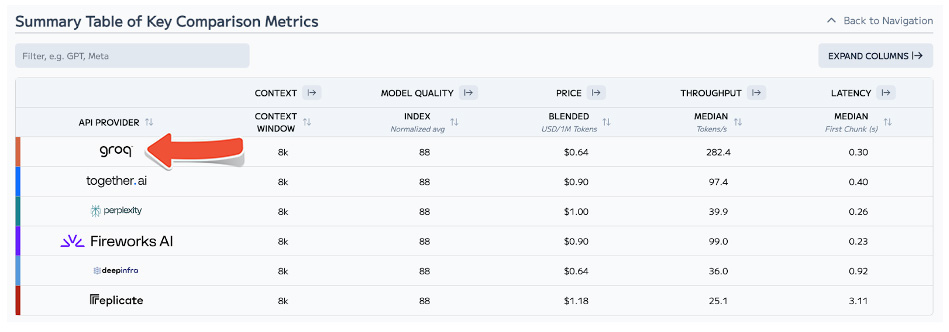

Groq offers 284 tokens per second for Llama 3 70B, 3-11x faster than other providers.

Throughput vs. Price

While ArtificialAnalysis.ai used a mixed price (input/output) of $0.64 per 1M tokens, Groq currently offers Llama 3 70B at a price of $0.59 (input) and $0.79 (output) per 1M tokens.
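To see how the two list prices relate to the single mixed figure, here is a minimal sketch. It assumes the mixed price is a weighted average with 3 input tokens for every 1 output token; that ratio is an assumption, not something stated in this post, and the function name `mixed_price` is purely illustrative.

```python
def mixed_price(input_price: float, output_price: float,
                input_weight: float = 3.0, output_weight: float = 1.0) -> float:
    """Weighted average price per 1M tokens.

    The default 3:1 input:output weighting is an assumption made for this
    sketch; the post itself does not state the ratio.
    """
    total_weight = input_weight + output_weight
    return (input_price * input_weight + output_price * output_weight) / total_weight


# Groq's listed Llama 3 70B prices, in USD per 1M tokens (from the post).
llama3_70b_mixed = mixed_price(input_price=0.59, output_price=0.79)
print(f"${llama3_70b_mixed:.2f} per 1M tokens")  # prints "$0.64 per 1M tokens"
```

Under that assumed weighting, the $0.59 and $0.79 list prices reproduce the $0.64 mixed price. A workload with a different input/output ratio would see a different effective price.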

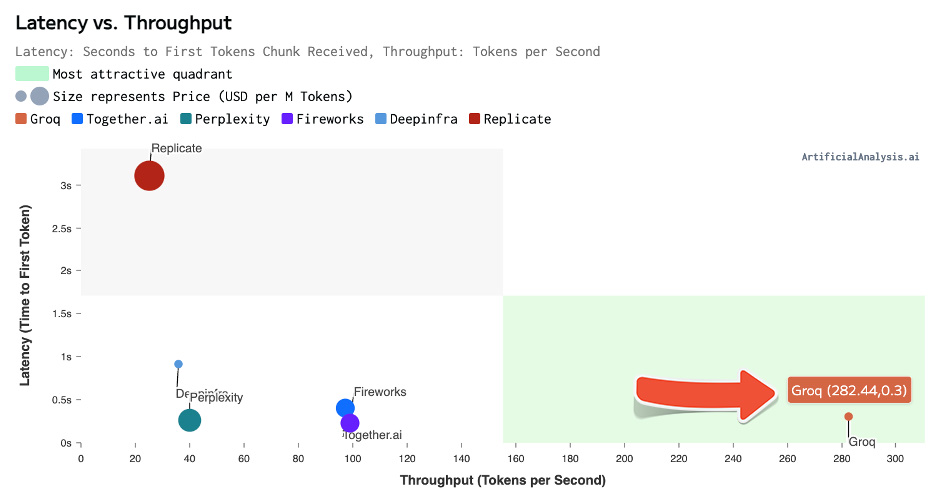

Latency vs. Throughput

Latency is measured in seconds until the first chunk of tokens is received, and throughput is measured in tokens per second. Groq comes in at 0.3 seconds of latency and 282 tokens per second of throughput.

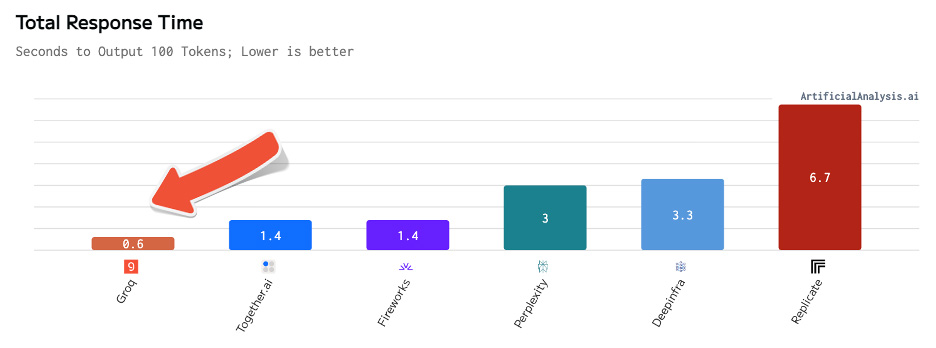

Total Response Time

Total response time is measured as the time to receive 100 output tokens, calculated from the latency and throughput metrics. Groq clocks in at 0.6 seconds.
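As a rough illustration of how latency and throughput combine, the sketch below assumes total response time is the time to first token plus the time to stream the remaining output at the measured throughput. The exact formula used by ArtificialAnalysis.ai is not given in this post, so treat `total_response_time` as a hypothetical helper rather than their method.

```python
def total_response_time(latency_s: float, throughput_tps: float,
                        output_tokens: int = 100) -> float:
    """Estimated seconds to receive `output_tokens` tokens.

    Assumed model for this sketch: latency to first token, plus the
    output length divided by throughput (tokens per second).
    """
    return latency_s + output_tokens / throughput_tps


# Figures quoted in the post: 0.3 s latency, 282 tokens/s throughput.
groq_estimate_s = total_response_time(latency_s=0.3, throughput_tps=282)
print(f"{groq_estimate_s:.2f} s")  # prints "0.65 s"
```

With the rounded inputs quoted above, the estimate lands at about 0.65 seconds, in the same range as the reported 0.6 seconds. The small gap is plausibly due to rounding in the published latency and throughput figures.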

Summary Table of Key Comparison Metrics

Thank You to Our Developer Community!

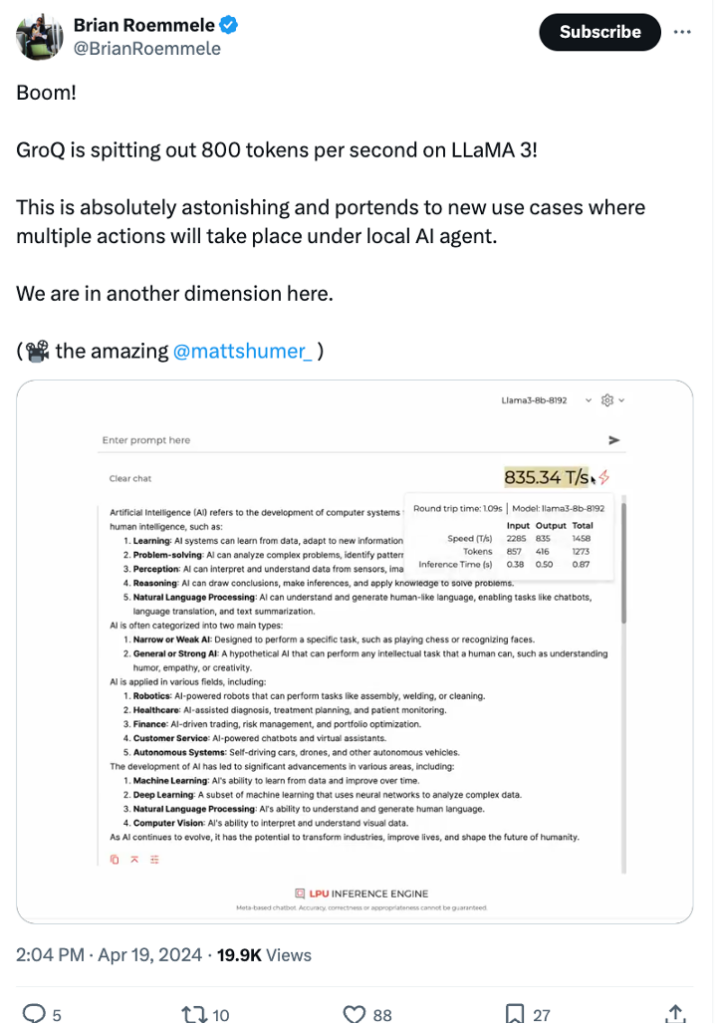

We’re already seeing members of our developer community share reactions, applications, and side-by-side comparisons. Check out our X page to see more examples, join our Discord community, and try it for yourself on GroqCloud™ Console.

Special shoutouts to those captured here: